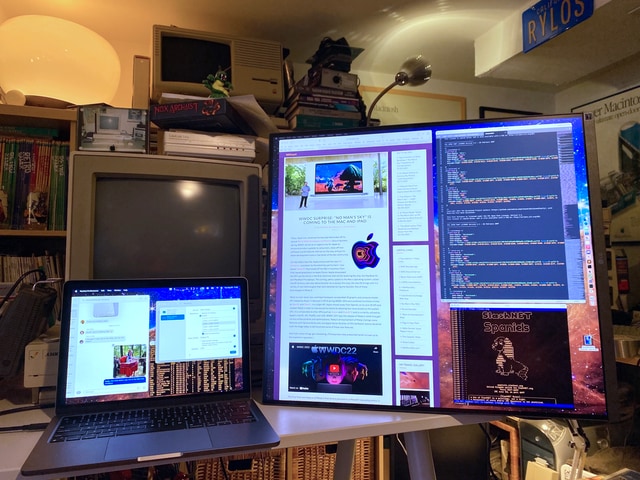

I found myself posting this information so frequently on forum threads and in video comments that I wanted to put it all together in one place so that I can share my experience and what I’ve learned with a single link. And, while this is primarily a vintage computing blog, not every post is of that nature. Apologies to my retro-only readers. (Well, there is an Amiga and a Lisa in that second photo down the page, there, so…)

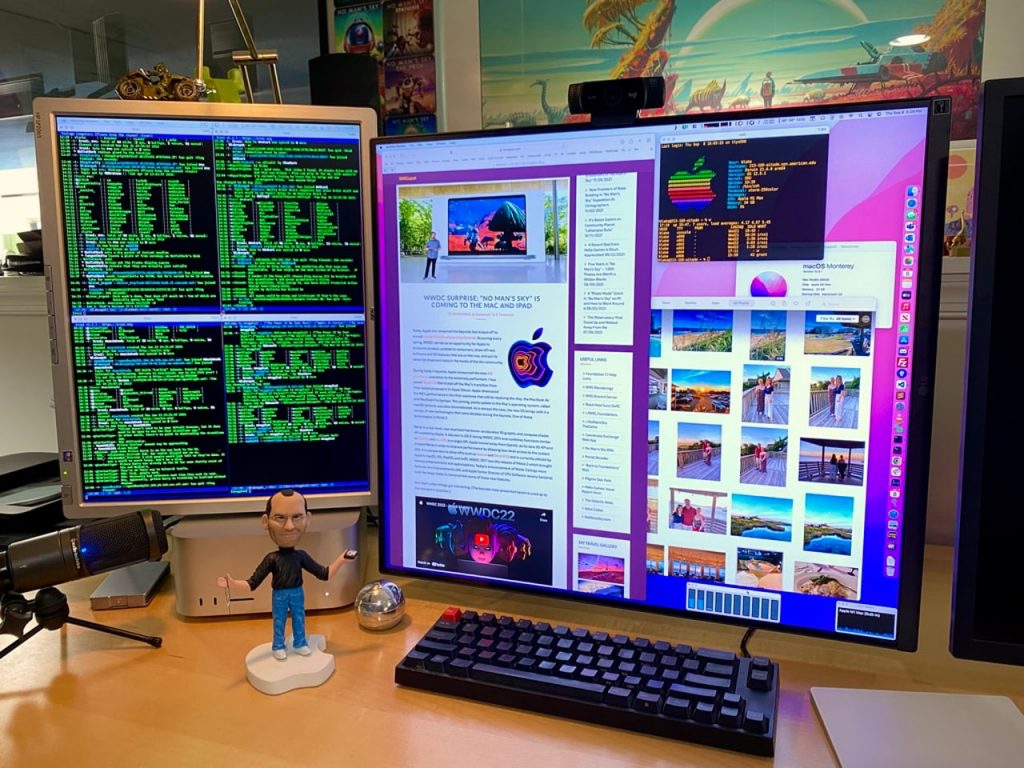

I recently replaced my aging 2017 5K Retina iMac with a Mac Studio, powered by an M1 Max processor and 32GB of RAM. Some months earlier, I had upgraded my aging laptop to a 2022 M2 MacBook Air. That iMac’s beautiful 27-inch 5K Retina display was hard to part with, so I wanted to give the new desktop system a very nice screen. And I have, in the form of an LG DualUp 28-inch display.

It’s an unusual display: a 16:18 aspect-ratio panel with a native resolution of 2560×2880. It is “tall” in its normal orientation, but I’ve chosen to use it rotated, which makes it look rather square (a great orientation for this display). It’s a perfect screen for my use cases as a general UNIX system and web development workstation. (I do not game on my desktop Mac, nor do I watch feature films, so a more traditional 16:9 aspect ratio has little value to me here.) I’ve had this system up and running for two months now, and I can say that, of all the displays I’ve ever used, this one is my favorite.
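As a quick check of that aspect-ratio arithmetic, here is a minimal sketch; `aspect_ratio` is a hypothetical helper written for this post, not part of any tool mentioned here. Reducing 2560×2880 by its greatest common divisor gives 8:9, the same shape LG markets as 16:18.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce a pixel resolution to its simplest whole-number aspect ratio."""
    d = gcd(width, height)
    return width // d, height // d


print(aspect_ratio(2560, 2880))  # DualUp, normal (tall) orientation: (8, 9)
print(aspect_ratio(2880, 2560))  # DualUp rotated, as on my desk: (9, 8)
print(aspect_ratio(3840, 2160))  # a typical 4K panel: (16, 9)
```

Rotated, the panel is only slightly wider than it is tall, which is why it reads as nearly square.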

Doing research to spec out the screen for this iMac replacement, I encountered a large number of people lamenting the use of 4K displays with Macs, noting that the Apple 5K displays, with a 218 pixel/inch density, allow for a computationally easy halving of the native display resolution to achieve an ideal desktop rendering. A 5K Apple display has a native resolution of 5120×2880 and the default view mode is a “looks like” 2560×1440 desktop — razor sharp. Halving the native display resolution is easy peasy for GPU hardware — a small lift. This is, presumably, why Apple’s Retina displays are 5K rather than 4K. The thing with 4K displays is that in their typical native 3840×2160 resolution, they present the UI elements far too small, while the system-suggested half-resolution rendering is a sharp “looks like” 1920×1080 display where the UI elements appear too large — there’s too little desktop real estate.
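For anyone who wants to check the pixel-density figures, here is a minimal sketch that computes PPI as the diagonal length in pixels divided by the diagonal in inches. The diagonals are assumptions on my part: a nominal 27 inches for the 5K and 4K panels, and roughly 27.6 inches of viewable area for the DualUp (which is sold as a 28-inch display).

```python
from math import hypot


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density: diagonal length in pixels divided by diagonal in inches."""
    return hypot(width_px, height_px) / diagonal_in


# Assumed diagonals: nominal 27" for the 5K and 4K panels,
# ~27.6" viewable for the LG DualUp (marketed as 28").
panels = {
    "27-inch 5K": (5120, 2880, 27.0),
    "27-inch 4K": (3840, 2160, 27.0),
    "LG DualUp": (2560, 2880, 27.6),
}
for name, (w, h, diag) in panels.items():
    print(f"{name}: {ppi(w, h, diag):.0f} ppi")

# The 5K default "looks like" mode is an exact halving on both axes.
print(5120 // 2, 2880 // 2)  # 2560 1440
```

Under those assumptions this comes out at roughly 218, 163, and 140 ppi, which is why the 5K panel halves so neatly to a 2560×1440 desktop while a 4K panel does not.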

The way around this is to have macOS “scale” the display to a more ideal lower resolution, but choosing that option in display preferences presents a warning: “Using a scaled resolution may affect performance.” What the OS does here is to scale up the chosen resolution to double height and double width (4x the pixels displayed) and then scale them back down to the display’s native resolution — 60 times per second. Indeed, this can be too much for certain older systems out there. But, as you will see, modern Macs should be able to handle the task just fine.

My display, which has a ~140 pixel/inch density (close to that of a typical 4K display), is scaling to a “looks like” resolution of 2304×2048. This means that macOS renders my desktop at 4608×4096 (18.9 million pixels) and then scales it down to the panel’s native (rotated) 2880×2560, 60 times per second. (The “looks like” target for a standard 4K display with a native resolution of 3840×2160 will typically be 2560×1440; it is thus rendered at 5120×2880 (14.7 million pixels) and then scaled back down to the panel’s native resolution.) This all sounds harrowing, and many concerned threads have been created about the dramatic performance impact that this scaling busywork necessarily has on the system. Among them is this frequently cited video created by a person who indicates that the performance of their M1 MacBook Pro was so dramatically impacted by a scaled 4K display that they returned it and got a more traditional, low-DPI 2K display.
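To make those numbers easy to reproduce for other displays, here is a minimal sketch. It assumes, as described above, that a HiDPI “looks like” mode is rendered at exactly twice the width and height before being scaled to the panel; `hidpi_backing` is a hypothetical helper, not a macOS API.

```python
def hidpi_backing(looks_like, native):
    """For a HiDPI "looks like" mode, return the 2x backing-store size,
    its pixel count, and the downscale ratio to the panel's native width."""
    bw, bh = looks_like[0] * 2, looks_like[1] * 2
    return (bw, bh), bw * bh, bw / native[0]


cases = [
    ("DualUp (rotated)", (2304, 2048), (2880, 2560)),
    ("27-inch 4K", (2560, 1440), (3840, 2160)),
    ("27-inch 4K, 2x", (1920, 1080), (3840, 2160)),
]
for label, looks, native in cases:
    (bw, bh), pixels, ratio = hidpi_backing(looks, native)
    per_second = pixels * 60  # the whole frame is refreshed 60 times per second
    print(f"{label}: render {bw}x{bh} = {pixels / 1e6:.1f} MP, "
          f"downscale {ratio:.3f}:1, {per_second / 1e9:.2f} Gpixel/s")
```

The DualUp case reproduces the 18.9 million pixels above with a 1.6:1 downscale, the 2560×1440 case reproduces the 14.7 million pixels at 4:3, and the default 1920×1080 HiDPI mode on a 4K panel comes out at exactly 1:1, so no downscale happens at all.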

This guy’s experience makes no sense to me.

My LG DualUp display arrived a few weeks before the Mac Studio for which it was purchased. In that time, to test it out, I attached it to my M2 MacBook Air. As expected, the native rendering presented the macOS UI in a far-too-tiny fashion, and the system’s suggested HiDPI “looks like” half-resolution of 1280×1440 was too large and too low-res. To unlock myriad other scaled resolutions to choose from, I reached for the excellent BetterDisplay. This handy app, among various other features, allows you to unlock many additional low-DPI and HiDPI resolutions in the system display settings and, in the end, I went with a “just right” resolution of 2304×2048, as mentioned earlier. The M2 Air drove the display perfectly, and everything felt glass-smooth, with no performance issues anywhere to be seen.

Still, I saw so much concerned discussion about macOS scaling in various places online that I wanted more assurance. I noticed that the author of BetterDisplay has a Discord channel to support users of the app. I joined it and had a number of conversations with him about my concerns. I showed him the aforelinked video of the distressed YouTube user who reported such dire 4K performance issues on his M1 MacBook Pro. He felt sure that something else must be amiss with that user’s configuration.

He explained,

The M1/M2 is specifically designed to be super efficient when it comes to macOS desktop rendering. M1 Pro+ Macs can drive multiple 5K displays alongside a 4K display simultaneously, all with scaling involved, which can result in huge framebuffers. So I think driving a single display with a 5K-6K framebuffer with scaling is way below the limitations of these machines.

…

If we try to make sense of it, a 5K framebuffer has about 15 million pixels. So at 60fps, about 900 million pixels must be processed. Let’s say 1 billion pixels. If we say that scaling is done super inefficiently, let’s say every pixel requires 5 floating-point operations to be scaled. That is 5 billion operations per second. Now, the M1 Pro can do about 5 teraflops; that is 5 trillion (5,000 billion) operations per second. That means that all the scaling stuff will consume 0.1% of the capabilities of the GPU. Even if there are additional inefficiencies and super wasteful processing, so that everything requires 10x as much processing, the desktop scaling will keep the GPU only about 1% occupied.

Even if somehow the 1% would turn into 10%, still 90% of the GPU is free. So surely scaling will not make a smooth video playback drop to 1fps in any circumstance. It would be such an obvious issue that I think everybody would know about it.
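The back-of-the-envelope estimate in that quote can be written out as a tiny sketch. The inputs are the rough figures from the quote itself (15 million pixels, 60 fps, a pessimistic 5 FLOPs per pixel, a ~5 TFLOPS GPU), not measurements, and `scaling_gpu_share` is a hypothetical helper.

```python
def scaling_gpu_share(pixels, fps, flops_per_pixel, gpu_flops):
    """Fraction of GPU throughput spent on per-pixel scaling work."""
    return pixels * fps * flops_per_pixel / gpu_flops


# Inputs from the estimate above (all rough): ~15 M pixels for a 5K
# framebuffer, 60 fps, a pessimistic 5 FLOPs per pixel, ~5 TFLOPS GPU.
share = scaling_gpu_share(15e6, 60, 5, 5e12)
print(f"{share:.2%}")       # 0.09%
print(f"{share * 10:.2%}")  # 0.90% even if everything is 10x more wasteful
```

The quote rounds 900 million pixels per second up to 1 billion, which is why it lands on 0.1% rather than 0.09%; either way, the conclusion is the same.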

As I stated, the M2 Air drove the DualUp display without incident. I viewed 60fps 4K videos, ran a few games, scrolled wildly through my massive photo gallery, swiped through five different virtual desktops — all smooth. My own observations of what was happening right in front of me revealed zero cause for concern, and the sound reasoning I heard in that Discord further put me at ease. And, as you might guess, the Mac Studio where the DualUp now lives also handles the scaled display, along with an unscaled secondary display, with complete ease.

A recent video by Kyle Erickson, whose tech videos I enjoy, should also help allay such concerns for those who have them.

UPDATE: As reader JohnS points out in the comments, Apple takes the fractional, non-2x scaling path themselves on some of their current systems:

The whole thing is bunk, because Apple uses a scaled resolution that is not 2x on the MacBook Air. If performance were such a factor, Apple would have defaulted to a 2x scale of 1280×800. To go further, Windows PCs have basically used fractional scales on notebooks for years, often 125% or 150%, and the same goes for higher-resolution screens….

It seems to be the case that recent, modern Macs — and not just those with Apple Silicon — can handle HiDPI scaling without issue.

Great write-up, and hopefully it will make concerned folks stop worrying. This whole issue has been blown way out of proportion; it’s taken on MaxTech levels of hyperbole, with people freaking out over nothing!

It should be quite obvious that this isn’t a significant burden, as lots of Apple laptops use scaled resolutions by default, even those with older and far less powerful Intel processors.

Thanks for the explanation; it helped me a lot!

I would like to know about text clarity. Does text look crisp and clear on the LG DualUp compared with the iMac 5K screen or the LG UltraFine 5K Display?

Scaled properly, it looks very clear, but it is not as absolutely razor sharp as a 5K or 6K display.

The issue isn’t the performance (although that’s annoying as well).

The issue is that the screen is scaled. It’ll look blurry and uneven. This defeats the purpose of a high-dpi screen in the first place.

Why not get a lower dpi screen that is cheaper, faster and looks sharper on macOS?

Rendering a 5K image onto a 4K screen won’t be slower than rendering a 5K image onto a 5K screen.

But it’ll look worse than rendering a 1440p image onto a 1440p screen.

And that last part is the frustrating one.

Well, performance isn’t a particular issue for modern systems, as I’ve tried to illustrate with this blog post. And as for your suggestion that it will look “blurry and uneven,” that would indeed be the case if the image were not rendered at a much higher resolution than the native 4K screen resolution and then scaled down to that native resolution. That’s the “HiDPI” macOS scaling we’re talking about here.

My screen is basically a 4K display as far as its DPI goes, but it has an odd aspect ratio. So, let’s take a more typical 4K screen, which is 3840×2160 pixels at a 16:9 aspect ratio. macOS sees this screen and defaults it to a “HiDPI” mode of 1920×1080. If it rendered at the native 3840×2160 on the typical ~27-inch 4K display, the UI elements and text would be much too small for most people’s liking. The “HiDPI” label indicates that it’s internally rendering the desktop at twice the height and twice the width (4x the pixels) of 1920×1080, which works out to 3840×2160, exactly the panel’s native resolution. Because that is an exact 2x on both axes, no fractional scaling is needed and the image is razor sharp. But the UI elements are now too big; there’s too little real estate on the desktop for most people’s liking.

Most users would desire a 2560×1440 desktop in this scenario. Here, a user could set the Mac to output a literal, non-HiDPI (not scaled internally) 2560×1440 to the 3840×2160 panel. In this scenario, the panel hardware itself would upscale the video to its native resolution and, given that this is not an exactly-twice-resolution upscale, it will look somewhat as you say “blurry and uneven.” (It’s also the case that macOS probably upscales images more cleanly than the firmware routines in said panel, even in this non-HiDPI scenario.)

The solution is to choose a HiDPI resolution of 2560×1440 in macOS. What this does is render the desktop at 5120×2880 internally and then scale it down (60 times per second) to the native panel resolution of 3840×2160. Here, the resulting image does not look “blurry and uneven,” and it looks significantly better than a lower-DPI screen with a native 2560×1440 panel. Trust me on this; I have a lower-DPI, 2560×1440 native display sitting two inches to the right of my Mac’s display here on the desk, which is attached to a Windows PC that I use exclusively for gaming.
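To get a feel for what a non-integer downscale does, here is a minimal one-dimensional sketch of squeezing 4 rendered pixels into 3 panel pixels (5120 to 3840 is exactly 4:3). Apple does not document the exact filter macOS uses for this step, so plain area averaging is used here as a stand-in, and `area_downsample` is a hypothetical helper.

```python
def area_downsample(src: list[float], dst_len: int) -> list[float]:
    """Resample a 1-D row of pixels to dst_len pixels by area averaging:
    each destination pixel is the coverage-weighted mean of the source
    pixels that fall under its footprint."""
    ratio = len(src) / dst_len
    out = []
    for i in range(dst_len):
        start, end = i * ratio, (i + 1) * ratio
        total = 0.0
        j = int(start)
        while j < end and j < len(src):
            overlap = min(end, j + 1) - max(start, j)  # fraction of src[j] covered
            total += src[j] * overlap
            j += 1
        out.append(total / ratio)
    return out


# A one-pixel-wide black line (0.0) on white (1.0) in the 2x rendering,
# squeezed 4:3 onto the panel.
print(area_downsample([1.0, 0.0, 1.0, 1.0], 3))
```

The printed values come out very close to `[0.75, 0.5, 1.0]`: the thin line is spread across two lighter gray panel pixels. That is a real softening, but because the source was rendered at 2x, the detail being averaged is much finer than a native 2560×1440 pixel, which is why the end result still looks sharper to me than the lower-DPI panel.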

I use this LG DualUp display (which, for the purposes of this conversation, is a “4K display”) every day, all day. I write code on it (job) and do all manner of other things (typical use), etc. I use lots of small-text terminal windows, what’s more.

I directly replaced my 27-inch 5K Retina iMac with this Mac Studio and this display, and I can say with conviction that this display looks only negligibly less clear to me than the iMac’s 5K Retina display did. I wear glasses, and with them on, my vision is perfect. It looks incredibly sharp and uniform, I find the fidelity of the image shockingly good, and a nice bonus is that this is a matte screen, so it lacks the highly reflective glare of the iMac’s display. If I get very close to the screen and look at the individual pixels of text, I can see that the 5K (when scrutinized at such close range) was sharper, but only on such close inspection do I notice this.

That said, I like the unique aspect ratio of this display so much more than the typical 16:9 that I consider this to be the best display I have ever used. For me, this aspect ratio makes far more sense than a widescreen display. The hint of a difference between it and a true 5K, visible only from five inches away, is so irrelevant and typically unnoticeable to me that it is not a factor at all.

I hope this helps.

I’m using the latest version of Ventura with “Show all resolutions” selected. I’m able to see double entries for some resolutions, with one of the duplicates labeled “(low resolution),” which gives my 4K monitor blurry text, while the other entry at the same resolution does not. Might the wrong one be selected?

Thank you so much for this. I’ve watched the videos you linked here as well as this one (https://www.youtube.com/watch?v=jaWpq-dNVro) and ended up really confused. This message cleared things up for me.

One question, though. When you said:

“Most users would desire a 2560×1440 desktop in this scenario. Here, a user could set the Mac to output a literal, non-HiDPI (not scaled internally) 2560×1440 to the 3840×2160 panel. In this scenario, the panel hardware itself would upscale the video to its native resolution and, given that this is not an exactly-twice-resolution upscale, it will look somewhat as you say “blurry and uneven.” (It’s also the case that macOS probably upscales images more cleanly than the firmware routines in said panel, even in this non-HiDPI scenario.)”

What did you mean by native resolution? Did you mean 4K or 1080? Earlier you used native resolution to mean 1080, but since you used the word upscale, I think you meant 4K, right?

This is exactly my experience. I switched to Mac from Windows, and as I already had three 4K monitors, I was concerned about how they’d play with the notorious scaling issues on macOS.

I need not have worried: rendering at 5120×2880 and downscaling to 3840×2160 produces results almost as crisp as Windows’ 4K output with 150% scaling. In both cases, it’s the equivalent of 1440p, perfect for 27″ 4K screens.

As for performance, I’m running an M1 Max, and it barely seems to notice that it’s driving 44 million pixels (3x 5K) plus downsampling.

Does anyone know in which version of macOS Apple added the HiDPI resolution options? Surely the widely publicised issues must have predated this option, when people were outputting 1440p to their 4K monitors.

Thank you for collecting all this information. I personally use 2K gaming monitors and had initially thought about changing monitors because I was seeing blurry text at the native resolution of 2560×1440. Then I discovered BetterDummy, now BetterDisplay, and set a resolution of 2048×1152, which translates to 4096×2304 HiDPI. This seems to me to be the best option for a 27″ 2K monitor, and I can even take advantage of the display’s 100 Hz refresh rate without having experienced any performance drops.

Nevertheless, I find it really sad that Apple does not handle scaling without third-party software, although BetterDisplay is great open-source software.

But if you were using 1:1 scaling, then there is nothing to cause blurring. I’m a bit confused.

I just got the DualUp and downloaded the software you mentioned. However, I could not seem to find the HiDPI resolution of 2560×1440. The max I got is 1440×1280 under HiDPI. Can you tell me what I am missing? I am using an M1 Mac, by the way. In addition, I have to rotate 170 degrees to get an orientation similar to your picture. Thanks!

If you haven’t solved the issue yet, try this: go to BetterDisplay settings, to the App menu tab, find the resolution section and check the show all resolutions option.

I still didn’t see it. I can only find the “show additional resolutions” option, but even then I couldn’t find 2560×1440 under HiDPI. I can see it under non-HiDPI, though.

It took me two weeks from the time I visited the Apple Store to find out why all the MacBook Air screens looked blurry to me. After those two weeks of research, from what I can tell, the issue seems to be Apple’s display scaling. To me the picture looks like someone is sharing their screen over Teams, as if there is some compression or resizing that screws up the sharpness.

Blake, thank you! Yours is the best post I’ve seen on this “scaling” math/physics quagmire. I got A’s in upper-level calculus but was still having difficulty understanding the issues of monitor “scaling.” I’m looking to upgrade to an M2, but without the budget or creative need for an Apple monitor. The question of what monitor to buy left me swimming in the wormhole threads about 4K scaling. Thank you for throwing me a life raft to help float me to a monitor purchase decision.

I don’t know why I have such difficulty understanding this. I have watched Kyle’s video and others on the topic many times. If the Dell display manager software says my resolution is 2560×1440, is my monitor still presenting me 4K data? If I change the display settings in Settings, does it only affect text?

Hi Blake,

I think the point of the guy in the video you referenced was RAM consumption, not CPU usage.
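On the RAM question, the size of the scaled framebuffer itself is easy to estimate. This is a minimal sketch that assumes 4 bytes per pixel (8-bit RGBA) and a single buffer; the real window server keeps additional buffers and per-window surfaces, so treat these as lower bounds, not measurements. `framebuffer_mib` is a hypothetical helper.

```python
def framebuffer_mib(width, height, bytes_per_pixel=4, buffers=1):
    """Approximate size of `buffers` framebuffers of width x height, in MiB."""
    return width * height * bytes_per_pixel * buffers / 2**20


# Assumes 4 bytes per pixel (8-bit RGBA) and a single buffer; the real
# window server keeps additional buffers and per-window surfaces.
for label, (w, h) in {
    "DualUp 2x backing store (4608x4096)": (4608, 4096),
    "2560x1440 HiDPI on 4K (5120x2880)": (5120, 2880),
    "4K at native, unscaled (3840x2160)": (3840, 2160),
}.items():
    print(f"{label}: {framebuffer_mib(w, h):.1f} MiB")
```

Under those assumptions, the DualUp’s backing store is 72 MiB versus about 32 MiB for an unscaled 4K frame: a real difference, but small next to the unified memory of these machines.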

I’m reading all about this because I was first looking into the Apple Studio Display as a replacement for my 1920×1200 Lenovo L220x monitor. But for me, 2560×1440 will not be a huge gain in usable space.

So I’m looking into the DualUp, because it would let me jump to almost twice the vertical space, even when scaled (to 2304×2048).

Since you’re used to the Apple 5K display, which has really nice colors, how do the DualUp’s colors compare?

Also, do you think it’s feasible to use the DualUp at native resolution but compensate by increasing the general UI size in macOS settings, Safari, and the Terminal configuration?

Once Apple moved to Retina screens for creators, the bar was set: Macs need a HiDPI screen to display macOS properly, since Apple has even removed the font smoothing option for lower resolutions.

Even macOS looks different on a non-HiDPI screen such as a 1080p display.

Windows PCs still tend to be in the 1080p or 1440p range.

I just recently retired my 5K iMac and have a brand-new 15-inch M2 MacBook Air. At my desk, of course, I want a bigger screen. Having spent a lot on my laptop, I got a budget Samsung 4K screen. The display is great, but I am just learning of all the pitfalls with scaling, having been spoilt by my 5K Retina display. I have settled on the 1440p “looks like” resolution that Apple renders at 2x and then scales down to 4K. It looks fine, but my eye definitely notices less sharp fonts. I ran some of the Terminal commands I used to use for non-Retina displays, and they have improved the sharpness greatly. Check out this link; you can easily reverse them if you don’t like them. https://osxdaily.com/2018/09/26/fix-blurry-thin-fonts-text-macos-mojave/

The whole thing is bunk, because Apple uses a scaled resolution that is not 2x on the MacBook Air. If performance were such a factor, Apple would have defaulted to a 2x scale of 1280×800. To go further, Windows PCs have basically used fractional scales on notebooks for years, often 125% or 150%, and the same goes for higher-resolution screens. If graphics hardware cannot handle scaling without performance hits, then that hardware is really bad. I think people need to set scaling as best suits their situation and forget about whether it’s a performance issue, because it’s not.

My man, you could not be more wrong and misinformed…

First of all, the LG DualUp is nowhere near the same league as the Apple/LG 5K panels, so that was a major downgrade. The second point here is that scaling has little to do with performance concerns for most users. It has everything to do with display quality, sharpness, and graphics rendering. If you had a better display, NPI, you would notice it immediately.

You need to have a vision test, and you definitely need a Studio Display… You can also go with a Dell or Samsung now, but I wouldn’t, because the native controls and integration are so smooth.

You also went from a 10-bit panel to an 8-bit one, so you’re taking a hit in color space coverage as well.

I’m just here in case people are reading in 2023. There is a reason why you need 220 ppi. That and glossy panels are what you should be buying for a Mac. If more people understood this, we would have a way better market for panels. Right now, gamers are driving the industry, and Mac users barely got VRR in 2020.

A 10-bit, 60 Hz, glossy, integrated display of around 220 ppi, or you are doing yourself a major disservice in the Apple ecosystem.

Well, I don’t think I can be called wrong or misinformed, here. I am aware that the pixel density and color fidelity of the Apple 5K display surpasses this display notably. My last Mac was an iMac 27″ with 4K Retina display, so I am no stranger to very high quality Apple displays, even if that display falls short of the 5K Apple Studio Display.

I have long wanted a “square” (or close) display, and that’s what the DualUp pretty much is. It has far more vertical real estate than Apple’s 5K display, at the expense of width. I don’t game or watch full movie sessions on this system. I use it for general internet usage (where vertical real estate is amazing), web development, photo management, and the like. A nearly square 28-inch display makes much more sense for these uses than a wide and “short” one.

I was very used to the 4K Retina display of the iMac, I spent time with the 5K display in the Apple Store, and I find the image this display produces (2880×2560 native, with the desktop rendered at 2x from a 2304×2048 “looks like” resolution and scaled back down) to be extremely crisp; I need to get very close to see any pixels. I also far prefer a matte finish to a glossy one, and I lamented going from the nice matte IPS 30-inch Apple Cinema Display to the glossy iMac display, as far as reflecting the lights behind me goes. Is this LG DualUp as crisp and razor sharp as the Apple 5K display? No, I am aware that it is not.

Also, the Apple 5K display is $1,000 more expensive than this $600 LG (and $1,600 turns into $1,900 if one springs for the “nano-texture” glass, not to mention the rag). Price is a factor for me, as well.

So, I would disagree that I “definitely need” an Apple 5K Studio display. If someone offered to trade me one for this LG display, I would decline. I think we value different attributes in our systems’ primary displays, and our use cases are likely rather different. Do enjoy your Studio Display!

You’ve actually been misinformed. The studio display does not have a 10-bit panel, and neither do any of the iMacs.

Also, a PPI of 160–190, combined with sitting a little farther back than the usual arm’s length, is acceptable to a lot of people when cost is factored into the equation.

It’s 8-bit with FRC (aka temporal dithering), which is basically 10-bit. It’s not a native 10-bit panel, but the scaler firmware can emulate 10-bit, and the GPU sends a 10-bit color depth signal. Okay, it’s not as good as a native 10-bit panel, true.
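For readers unfamiliar with FRC, here is a minimal, simplified sketch of the temporal part of the idea: a 10-bit level that falls between two 8-bit levels is approximated by alternating between those two levels over a short cycle of frames. Real panels typically combine this with spatial patterns across neighboring pixels; `frc_frames` and its 4-frame cycle are illustrative assumptions, not any vendor’s algorithm.

```python
def frc_frames(value_10bit, frames=4):
    """Approximate a 10-bit level (0-1023) on an 8-bit panel by alternating
    between the two nearest 8-bit levels. Use a multiple of 4 frames so the
    average works out exactly."""
    base, remainder = divmod(value_10bit, 4)  # 10-bit = 8-bit * 4 + (0..3)
    high = min(base + 1, 255)                 # clamp at the top 8-bit level
    # Show the higher level in `remainder` out of every 4 frames.
    return [high if f % 4 < remainder else base for f in range(frames)]


seq = frc_frames(513)            # 513 / 4 = 128.25 in 8-bit terms
print(seq, sum(seq) / len(seq))  # [129, 128, 128, 128] 128.25
```

Averaged over the cycle, the eye sees 128.25, a level the 8-bit panel cannot show directly, which is why 8-bit+FRC is described as “basically 10-bit” but not quite the same as a native 10-bit panel.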

Why would you prefer a glossy panel?

The main difference I know of being:

* glossy: brighter (?), but every light source is visible on screen (annoying)

* matte: dimmer colors, but handles light sources much better

I’m not a graphic designer, I’m a programmer -> therefore I don’t really care whether the colors are as accurate as they can be (I’m using Flux most of the time anyway) -> so I don’t care about glossy (better?) colors.

Unless there’s any other reason?

I am also a developer, and I consider the matte finish on this screen a huge plus. The ACD 30 was matte, and my other external screen is matte; I don’t get the glossy appeal. Not even for gaming: the gaming PC sitting next to my Mac has a curved, matte screen as well.

https://flic.kr/p/2ocg96Z

Thanks for the article, full of very useful info.

I’ve read a lot regarding the 1440p vs 4K debate, but was wondering if you know how 1920×1080 would run on an M2 Mac mini?

I live in Japan and Dell monitors are by far the best value here.

I’m looking at a 23.8″ 1920×1080 Dell S2421HS, as it is only $100 new!

PPI is 92.

Would that be blurry? Is BetterDisplay capable of making it look sharper?

Any thoughts on this would be much appreciated.

Thanks for sharing this info. I have a 38″ ultrawide monitor with 3840×1600 resolution. My 15″ 2015 Intel Retina Macbook Pro had no issues running it at native resolution, but any display scaling would cause the system to overheat and drop frames. However, I upgraded to an M1 Pro Macbook Pro and it handles this display, plus another 4k monitor with ease.

As others have pointed out, the issue with modern Macs is NOT performance, rather the artifacts that come from scaling it up to 5k and then back down again. Doing it this way is idiotic. Literally no one else does this except Apple.

The proper way to do HiDPI is to use the display’s native resolution and use vector graphics for the UI, so that there is no artifacting at any resolution or scaling percentage, and the user can choose their own scaled resolution on a slider.

This is how Windows 11 and modern Linux distros using X Windows handle HiDPI.

macOS still uses rasterized graphics for the UI, so it literally can’t do this. Apple just doesn’t seem to care, because it’s “go buy their screens or F you, guy.”

Clickbait. I’m using the same 27” 4K monitor with an M1 Max MacBook Pro and a PC. At first glance it looks OK on macOS; it’s not blurry until you switch to Windows. The same applications, like Chrome, Evernote, etc., just look better, I mean the text. On the Mac the font is smaller and fatter; Apple Notes or Craft look bad and use like 1/3 of the screen real estate.

It’s because Windows can scale fonts while macOS scales the image 5K->4K; Windows supports anti-aliasing, macOS does not. End of story.

macOS does support font anti-aliasing. Its algorithm produces a look more akin to a printed page, while Microsoft’s anti-aliasing leans more toward snapping glyphs to the vertical and horizontal pixel grid of an LCD. Some prefer one over the other. (I prefer the macOS approach.)

If you are using a scaled resolution (say, with a 4K display), you can set what res you scale to and, as such, affect the overall size of fonts, UI elements, etc. And, when things are scaled the way macOS does it, everything is more-or-less anti-aliased.

Scaling is not always perfect on any OS. You have a panel with a set number of pixels and a predetermined native resolution. HiDPI screens require some amount of scaling; otherwise text is just too small. OS developers use different means to scale, with varying amounts of success. Integer scaling such as 200% or 300% is best because it maps perfectly onto pixels. Non-integer scaling (125%, 150%, 175%) tries to split a pixel into fractions, which is impossible, so processes such as anti-aliasing are used. This produces what some complain about as soft or even blurry text. It’s always better to wind up with an integer scale factor than a non-integer one. This involves some homework: choosing the right HiDPI screen for the OS being used. Windows does seem to do a little better with fractional scaling, but it’s not without its own issues.
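The “splitting a pixel into fractions” point can be made concrete with a small geometric sketch: map a line one logical pixel wide onto physical pixels at a given scale factor and see how much of each physical pixel it covers. This is only an illustration of the geometry, not how any particular OS rasterizes; `line_coverage` is a hypothetical helper.

```python
import math


def line_coverage(x: int, scale: float) -> dict[int, float]:
    """Map a vertical line one logical pixel wide, at logical column x, onto
    physical columns at the given scale factor. Returns the fraction of each
    physical pixel the line covers (1.0 = fully covered)."""
    left, right = x * scale, (x + 1) * scale
    coverage = {}
    for col in range(math.floor(left), math.ceil(right)):
        overlap = min(right, col + 1) - max(left, col)
        if overlap > 0:
            coverage[col] = round(overlap, 3)
    return coverage


print(line_coverage(1, 2.0))   # {2: 1.0, 3: 1.0}: two solid pixels
print(line_coverage(1, 1.5))   # {1: 0.5, 2: 1.0}: one partially covered pixel
print(line_coverage(1, 1.25))  # {1: 0.75, 2: 0.5}: two partially covered pixels
```

At 200% every edge lands on a pixel boundary; at 125% or 150% some edges land mid-pixel, and those partially covered pixels are what anti-aliasing has to render as intermediate shades.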

Just a thought: why not render at a high enough resolution to avoid non-integer scaling, with its uneven lines and additional anti-aliasing? In the commonly cited case of a 27″ 4K monitor and a desired “desktop resolution” of 2560×1440, you would render at 3x the desired resolution, 7680×4320, and then scale to half size, 3840×2160, which happens to be the native resolution of 4K monitors. Yes, there are more pixels to render, but the scaling should be much faster.
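Here is a minimal sketch of that idea: the resolution chain, plus a 2:1 box filter that averages each 2×2 block of the 3x rendering into one panel pixel. The filter choice and `box_downsample_2x` are assumptions for illustration. One caveat the arithmetic shows: the final downscale becomes an exact 2:1, but the overall logical-to-panel ratio is still 1.5, so a line one logical pixel wide (3 rendered pixels) still lands on 1.5 panel pixels.

```python
def box_downsample_2x(img: list[list[float]]) -> list[list[float]]:
    """Halve an image in both dimensions by averaging each 2x2 block."""
    h, w = len(img), len(img[0])
    assert h % 2 == 0 and w % 2 == 0, "dimensions must be even"
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]


# Resolution chain from the comment: a 2560x1440 desktop rendered at 3x.
desktop = (2560, 1440)
render = (desktop[0] * 3, desktop[1] * 3)  # 7680x4320
panel = (render[0] // 2, render[1] // 2)   # 3840x2160, native 4K
print(render, panel)

# A line one logical pixel wide is 3 rendered pixels (0.0 = black) and
# still ends up covering 1.5 panel pixels after the 2:1 box filter.
rows = [[1.0, 1.0, 0.0, 0.0, 0.0, 1.0]] * 2
print(box_downsample_2x(rows))  # [[1.0, 0.0, 0.5]]
```

So the downscale step itself becomes simple and exact, at the cost of rendering 2.25 times as many pixels as the 5120×2880 approach, but some edges will still fall on half-pixels.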